Problem

Recently I needed to migrate opportunities from our legacy system into Salesforce. I am no stranger to the data loader, so this is normally not a problem. The tricky part was that each opportunity needed to be linked to its account through an external ID field instead of the Account ID, which I could not accomplish with the data loader alone.

Solution

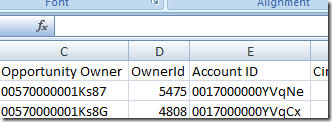

1. Run a report that contains the external ID field (Enrollment Owner ID) and the Account ID, filtering out records where the enrollment ID is not present.

Export the report.

2. Load my Salesforce Find and Replace Template (Excel 2007 required – may work w/2003 & compatibility pack).

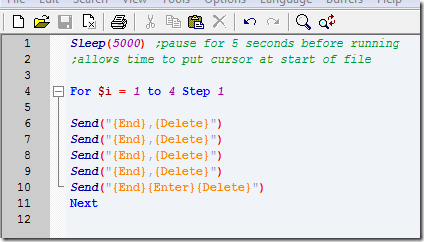

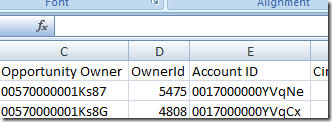

Paste the results of the above report into the left two columns, starting in the yellow section (in this case, the order of the fields needed to be reversed).

Then paste the external IDs that you are looking to match into the clear column on the right. Select the Salesforce ID formula (E5) and double-click the plus sign at the bottom right of the cell to extend the formula down the entire worksheet.

Column E will now output the corresponding ID from column B when columns D and A match. The lookup returns only one match per external ID, so it is best to start with a duplicate-free list of external IDs.
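For anyone who would rather script the lookup than use the worksheet, here is a minimal Python sketch of the same idea. It is not the template's formula: the function names and sample IDs are made up for illustration, and it assumes the first Account ID listed for an external ID is the one that wins.

```python
def build_lookup(report_rows):
    """Map each external ID to the first Salesforce ID seen for it."""
    lookup = {}
    for external_id, salesforce_id in report_rows:
        # setdefault keeps the first value, so later duplicates are ignored
        # (an assumption about how duplicates should be resolved).
        lookup.setdefault(external_id, salesforce_id)
    return lookup


def match_ids(external_ids, lookup):
    """Return the Salesforce ID for each external ID, or None when nothing matches."""
    return [lookup.get(external_id) for external_id in external_ids]


# Made-up sample data: (external ID, Account ID) pairs from the exported report.
report_rows = [
    ("1001", "001A000000AAAAA"),
    ("1002", "001A000000BBBBB"),
    ("1001", "001A000000CCCCC"),  # duplicate external ID, ignored
]

lookup = build_lookup(report_rows)
matched = match_ids(["1002", "1001", "9999"], lookup)
print(matched)
```

Unmatched external IDs come back as `None`, which makes them easy to filter out before anything goes near the data loader.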

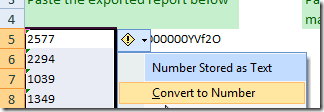

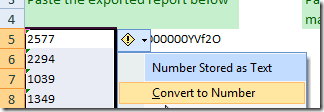

You may need to convert both ID columns to numeric or text values, depending on your external ID, so that they are stored the same way. For this function to work correctly for me, I had to convert them to numbers.
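The same type mismatch bites in a scripted lookup too: the number 1001 and the text "1001" are not equal as keys. The sketch below shows one way to normalize both sides before matching. `normalize_key` is a hypothetical helper, not part of the template, and it assumes that leading zeros in numeric IDs are not significant.

```python
from decimal import Decimal, InvalidOperation


def normalize_key(value):
    """Return a canonical text form of an external ID (hypothetical helper)."""
    text = str(value).strip()
    try:
        number = Decimal(text)
    except InvalidOperation:
        # Not numeric, so compare it as trimmed text.
        return text
    # Whole numbers such as 1001, "1001" and "1001.0" all become "1001".
    # Assumption: leading zeros carry no meaning and may be dropped.
    if number.is_finite() and number == number.to_integral_value():
        return str(int(number))
    return text


print(normalize_key(1001), normalize_key("1001.0"), normalize_key(" A-17 "))
```

Apply it to both the report column and the column of IDs you want to match, so that the two sides are compared in the same form.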

3. Take the matched Salesforce IDs and paste them into a new column in your import file.

To remove the Excel formatting, paste the IDs into Notepad first, then select all, cut, and paste them into the worksheet, nicely matched.

Credit to Mr Excel for the formula magic.

Update

Before I got the chance to finish this post, I needed this template to match a data field as well. I used this additional field and a formula to add a picklist selection to a multi-select picklist field with existing data (without this formula, the data loader would overwrite the existing selections).

In case you’re curious, the formula in H4 is =CONCATENATE(G4,"; Weekly Mailing").
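As a rough Python equivalent of that formula, the sketch below appends a value to an existing semicolon-separated selection string. It goes slightly beyond the CONCATENATE formula, which always appends: this version skips the value if it is already selected and avoids a leading separator when the field is empty. The function name and the "Monthly Newsletter" value are made up for illustration.

```python
def add_picklist_value(existing, new_value, separator="; "):
    """Append new_value to a multi-select picklist string, keeping existing selections."""
    # Split on ";" and trim whitespace so "A;B" and "A; B" are treated alike.
    selections = [item.strip() for item in existing.split(";") if item.strip()]
    # Unlike the spreadsheet formula, do not add a value that is already selected.
    if new_value not in selections:
        selections.append(new_value)
    return separator.join(selections)


print(add_picklist_value("Monthly Newsletter", "Weekly Mailing"))
print(add_picklist_value("", "Weekly Mailing"))
```

Checking for an existing selection matters if the load is ever run twice against the same records.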

Click here to download the template.

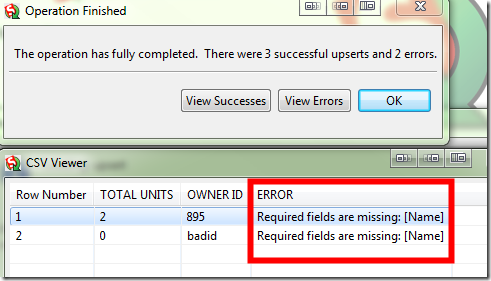

Disclaimer: use at your own risk. Be very careful playing with the data loader. Spot check your data and make sure to do a test run of a few records before proceeding with a large data set.